TIME SYNC / FILM SLATE

Several of our tools need multiple iOS devices to stay in sync and exchange data. For time-sensitive tasks like synchronizing cameras and audio during shoots, we came up with a method to reliably sync the devices' clocks.

Since Apple doesn’t allow apps to change the system clock, we wrote our own NTP-style syncing implementation using algorithms described here and here. In short, we exchange a total of 300 packets via the Multipeer Connectivity framework and use them to compute the mean clock deviation between the two devices. This value is stored locally on the device that initiated the sync, which allows multiple devices to sync to one “master” device/time.
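To illustrate the idea, here is a minimal sketch of an NTP-style offset estimate averaged over many round trips. It is not our actual implementation: the `SyncSample` type, the `meanOffset` function and the simulated latencies are assumptions made for this example, and the packet transport is left out entirely.

```swift
import Foundation

/// One request/response round trip, using the four classic NTP timestamps (in seconds).
struct SyncSample {
    let t0: Double  // local clock: request sent
    let t1: Double  // master clock: request received
    let t2: Double  // master clock: response sent
    let t3: Double  // local clock: response received

    /// Estimated offset of the master clock relative to the local clock.
    var offset: Double { ((t1 - t0) + (t2 - t3)) / 2 }

    /// Round-trip network delay, excluding processing time on the master.
    var roundTripDelay: Double { (t3 - t0) - (t2 - t1) }
}

/// Mean offset over all samples; nil if no samples were collected.
func meanOffset(of samples: [SyncSample]) -> Double? {
    guard !samples.isEmpty else { return nil }
    return samples.map { $0.offset }.reduce(0, +) / Double(samples.count)
}

// Simulated exchange (hypothetical numbers): the master clock runs 2.5 s ahead
// and the one-way latency varies between 10 and 14 ms per packet.
let trueOffset = 2.5
let samples: [SyncSample] = (0..<300).map { i -> SyncSample in
    let latency = 0.010 + Double(i % 5) * 0.001
    let t0 = Double(i)
    let t1 = t0 + latency + trueOffset
    let t2 = t1 + 0.001                    // 1 ms processing time on the master
    let t3 = t2 - trueOffset + latency
    return SyncSample(t0: t0, t1: t1, t2: t2, t3: t3)
}
let estimate = meanOffset(of: samples)!
print(estimate)  // close to the simulated 2.5 s offset
```

Averaging helps because single packets are affected by latency jitter. With asymmetric latency, each individual sample is off by half the asymmetry, which is one reason to collect many packets.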

Please note that syncing has to happen one device at a time due to the nature of how our framework handles the packet exchange. We may add batch syncing at a later time.

Once the devices are synced, any device can send the others a timestamp at which the slate should fire, and every device executes the trigger function at that point in time.
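As a rough sketch of this step, a received fire time on the master clock can be translated into a local delay using the stored deviation. The function names and the sign convention (master time minus local time) are assumptions for this example, and `triggerSlate` in the comment is a hypothetical function.

```swift
import Foundation

/// Converts a fire time expressed on the master clock into the local clock,
/// given the stored offset (master time minus local time, in seconds).
func localFireTime(masterFireTime: TimeInterval,
                   masterMinusLocal offset: TimeInterval) -> TimeInterval {
    return masterFireTime - offset
}

/// Seconds to wait from `localNow` until the slate should fire; never negative.
func delayUntilFire(masterFireTime: TimeInterval,
                    masterMinusLocal offset: TimeInterval,
                    localNow: TimeInterval) -> TimeInterval {
    let fireTime = localFireTime(masterFireTime: masterFireTime, masterMinusLocal: offset)
    return max(0, fireTime - localNow)
}

// Example: the master clock is 2.5 s ahead and the slate should fire at master time 1000.
let delay = delayUntilFire(masterFireTime: 1_000, masterMinusLocal: 2.5, localNow: 995)
// In an app, this delay could feed a timer, for example:
// DispatchQueue.main.asyncAfter(deadline: .now() + delay) { triggerSlate() }
print(delay)
```

Clamping the delay to zero means a timestamp that arrives too late fires immediately instead of being dropped. Whether that is the right behavior depends on the use case.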

Current bugs:

SwiftUI handles @Published UI updates in a way we do not quite understand yet. This leads to the first trigger occasionally being offset. While all devices show the same time throughout the call of the function, SwiftUI delays toggling between the standard and “triggered” screen. As this may lead to confusion among users as to whether the devices are in fact synced correctly, we are looking into this.

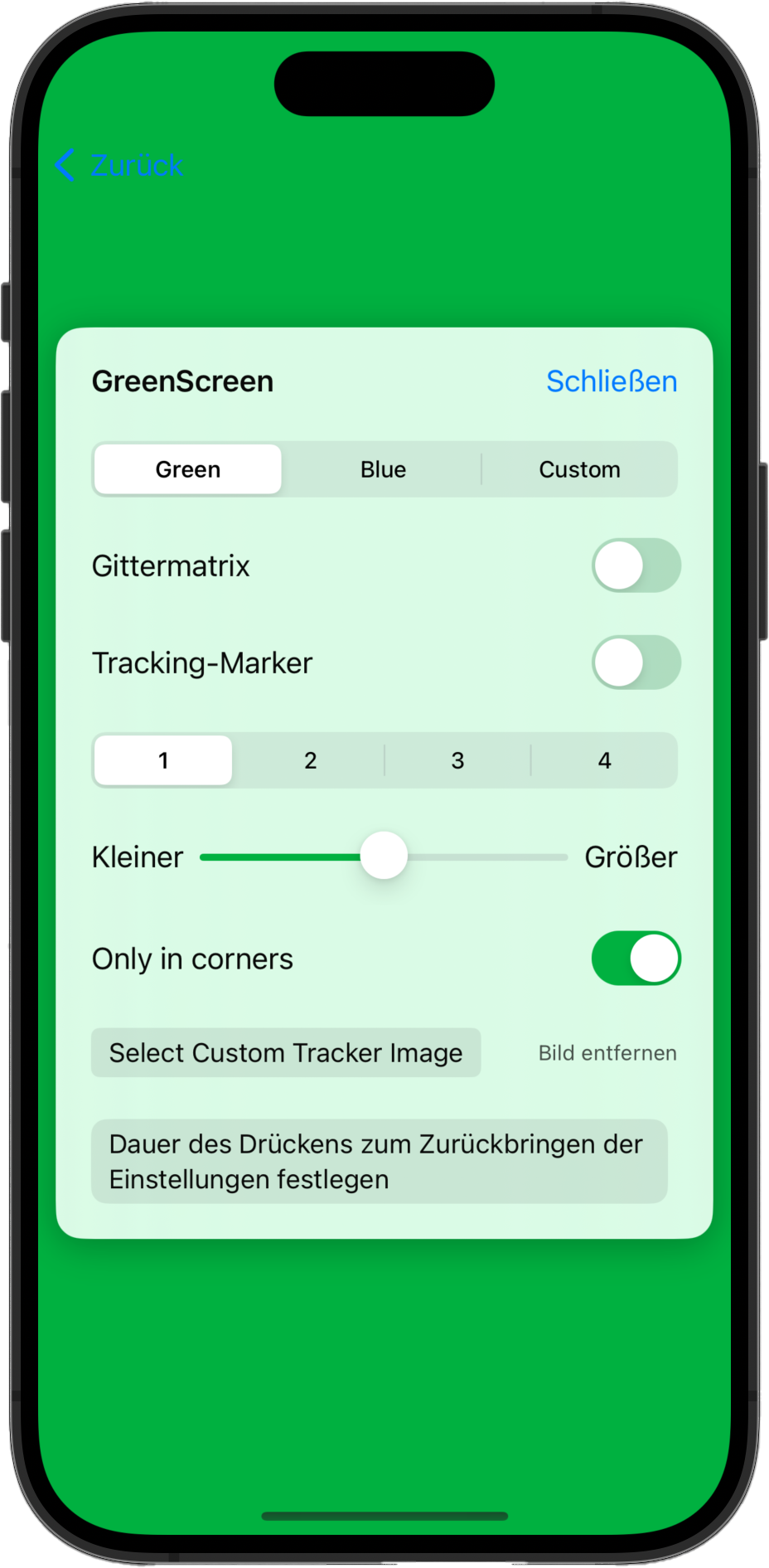

GREEN SCREEN

VIRTUAL CAMERA

When we set out to develop the virtual camera tool for our app, there were basically only two apps that recorded video together with 3D camera data. We felt both were missing features that Apple exposes through its ARKit API, so we decided to give it our best shot.

DISCLAIMER: Our virtual camera tool is still in development.

So, what were we missing, you might ask…

For starters, we wanted 4K support, as no other app was offering this, even though it has been supported by ARKit for well over a year. To be fair, Byplay has since implemented this in the paid tier of their recent big 2.0 release as well.

Next, we wanted to add multi-device support, so that VFX can be added stress-free when multiple angles of a scene are filmed. For this we use ARKit’s ARImageAnchor to detect a reference image and set the world origin to its position. After this, we use our syncing protocol as described here to start and stop all devices at once. In post-production it’s as easy as importing all .dae files into your scene and tada: position- and time-synchronized cameras are ready to go!

During development we found that Apple exposes depth maps on LiDAR-enabled devices, so these can also be recorded for quick roto previews or other depth-related effects.

Another quirk we found is that ARKit doesn’t deliver continuous real-life rotations; its angles stay within a -180° to +180° range. When the camera rotates past this limit, ARKit simply flips back to the other side. In post, this means random smeary frames if motion blur is turned on during rendering, as the camera appears to rotate by almost 360° from one frame to the next. Sadly, many other apps don’t take this into consideration.
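One common way to deal with this kind of wrap-around is to "unwrap" the angles before export, so that consecutive frames never jump by more than 180°. The sketch below shows the general technique on a plain array of per-axis angles in degrees. The function name and sample values are assumptions for this example, and it is not necessarily how our exporter handles it.

```swift
import Foundation

/// Removes ±180° wrap-around jumps from a per-frame sequence of angles in degrees,
/// so that consecutive frames never differ by more than 180°.
func unwrapDegrees(_ angles: [Double]) -> [Double] {
    guard var previous = angles.first else { return [] }
    var result = [previous]
    for angle in angles.dropFirst() {
        // Smallest signed step from the previous raw angle to this one, in [-180, 180).
        var step = (angle - previous).truncatingRemainder(dividingBy: 360)
        if step >= 180 { step -= 360 }
        if step < -180 { step += 360 }
        result.append(result[result.count - 1] + step)
        previous = angle
    }
    return result
}

// A camera panning steadily in 10° steps past the +180° limit.
let raw: [Double] = [160, 170, 180, -170, -160]
let continuous = unwrapDegrees(raw)
print(continuous)  // [160.0, 170.0, 180.0, 190.0, 200.0]
```

The unwrapped values keep increasing past 180°, so a renderer interpolating between frames sees a 10° step instead of a 350° spin in the opposite direction.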

Finally, we wanted image stabilization, something that ARKit doesn’t offer because it needs the raw camera data in tandem with sensor readouts for tracking. Luckily, we found a workaround by implementing GyroFlow support. Every recording comes with a sidecar .gcsv file containing accelerometer, gyro and magnetometer data. After rendering out all your 3D elements, you can use this data to stabilize everything to your liking.

Other quality-of-life features include H.265 support for smaller file sizes, HDR video and LiDAR-reconstructed meshes.

Sadly, some things are out of our hands, as Apple only allows limited control over the AVCaptureSession, which is responsible for audio and video during an ARSession.

Things we hope will improve with future iOS releases:

– Audio quality

– LiDAR mesh fidelity

– LiDAR depth maps (limited to 256×192)

– Inconsistent sensor readouts on some OS versions

Current bugs that we are looking into:

– Excessive autofocus bursts in sub-optimal lighting

– Choppy video when the device is under a lot of stress

– Random sensor readout dropouts

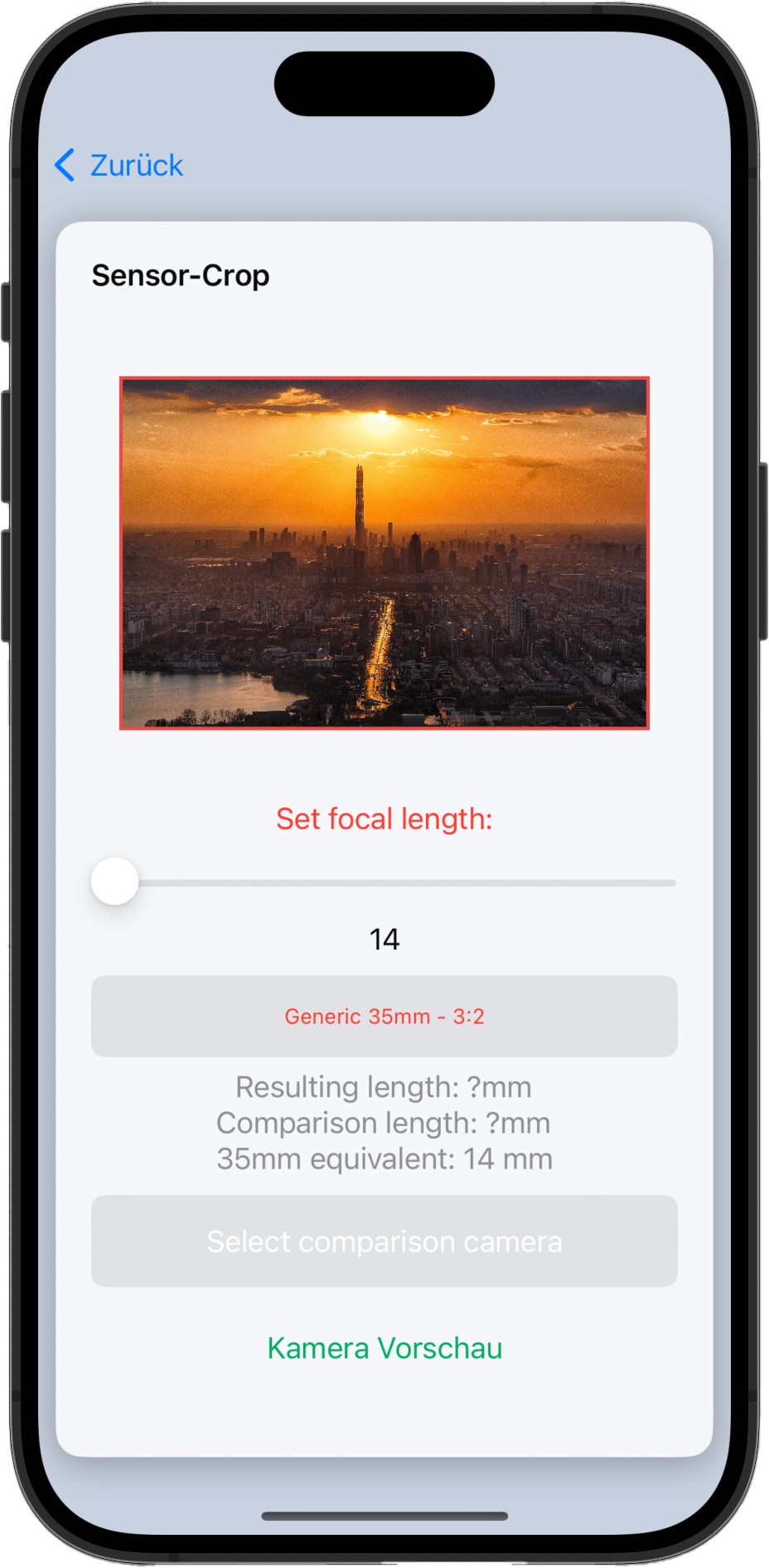

DIRECTOR'S VIEWFINDER / SENSOR COMPARISON

When preparing for a video shoot, directors and cinematographers often face the question of which camera to use for which shot. Director’s viewfinders are handy tools that help with this decision, so we decided to incorporate one into our app's ecosystem as well.

Additionally, we fancied a tool that would let users compare cameras to each other to see how their respective fields of view match up. The result is a DVF we are rather proud of. It detects the user's device and adaptively renders a representation of what the selected camera would see.

We also provide a sensor comparison and inform the user of the focal length divergence between any two camera models/modes. This allows for a swift evaluation of equipment and speeds up production planning.
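For readers curious about the math behind such a comparison, here is a small sketch based on the standard pinhole field-of-view formula. The function names and sensor widths are assumptions for this example and do not reflect the app's internal camera database.

```swift
import Foundation

/// Horizontal field of view in degrees for a sensor width and focal length (both in mm).
func horizontalFieldOfView(sensorWidth: Double, focalLength: Double) -> Double {
    return 2 * atan(sensorWidth / (2 * focalLength)) * 180 / Double.pi
}

/// Focal length on sensor B that gives the same horizontal field of view
/// as `focalLength` does on sensor A.
func matchingFocalLength(focalLength: Double,
                         sensorWidthA: Double,
                         sensorWidthB: Double) -> Double {
    return focalLength * sensorWidthB / sensorWidthA
}

// Hypothetical example: an 18 mm lens on a 36 mm wide sensor,
// compared against a camera with a 24 mm wide sensor.
let fov = horizontalFieldOfView(sensorWidth: 36, focalLength: 18)
let matched = matchingFocalLength(focalLength: 18, sensorWidthA: 36, sensorWidthB: 24)
print(fov, matched)  // roughly 90 degrees; a 12 mm lens matches on the smaller sensor
```

The difference between the focal length in use and the matching focal length is one way to express how far two camera setups diverge.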